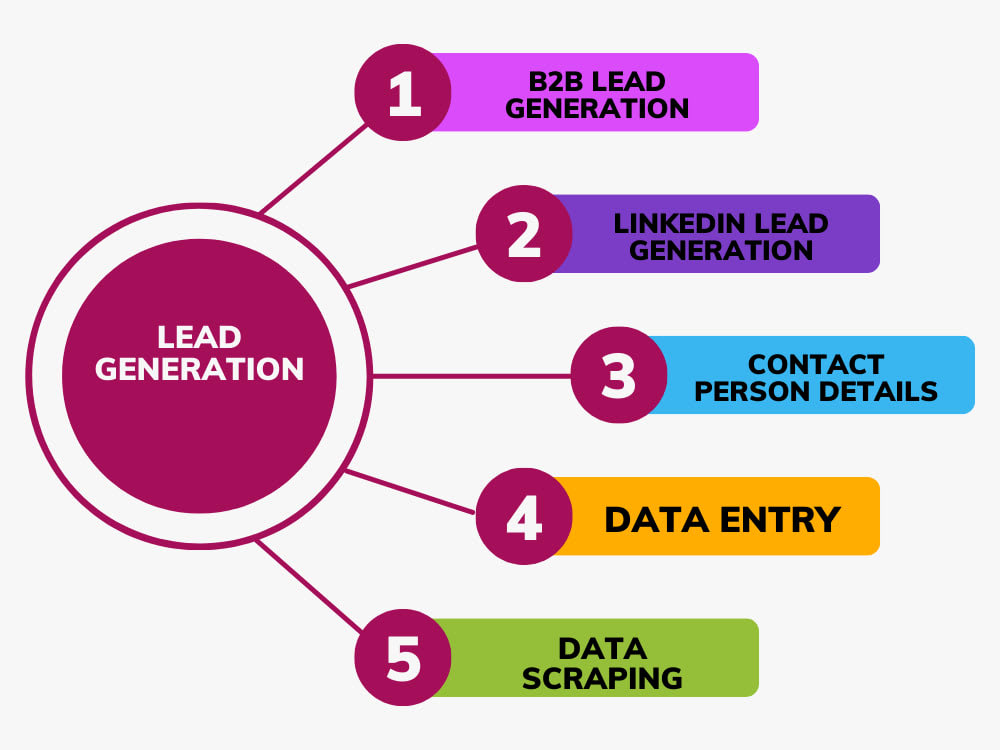

25+ Excellent Big Data Statistics for 2023

As organizations continue to see big data's immense value, 96% will look to hire specialists in the field. While going through various big data statistics, we found that back in 2009 Netflix invested $1 million in improving its recommendation algorithm. What's even more interesting is that the company's budget for technology and development stood at $651 million in 2015.

According to the latest Digital report, internet users spend 6 hours and 42 minutes a day online, which clearly illustrates rapid big data growth. So, if each of the 4.39 billion internet users spent 6 hours and 42 minutes online each day, we've collectively spent 1.2 billion years online over a single year.

While companies spend most of their Big Data budget on transformation and innovation, "defensive" investments like cost savings and compliance take up a greater share each year. In 2019, just 8.3% of investment decisions were driven by defensive concerns. In 2022, defensive measures made up 35.7% of Big Data investments.

Data is one of the most valuable assets in most modern organizations. Whether you're a financial services firm using data to fight financial crime, a transportation company looking to reduce ...
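The 1.2 billion years figure quoted above can be sanity-checked with a few lines of arithmetic. The sketch below is only an illustration: the user count and daily time online come from the text, while the 365-day year, the one-year totalling period, and the function name `collective_years_online` are assumptions made for the example.

```python
# Figures quoted in the text above.
INTERNET_USERS = 4.39e9               # internet users worldwide
HOURS_ONLINE_PER_DAY = 6 + 42 / 60    # 6 hours 42 minutes per user per day

# Assumptions (not stated in the text): a 365-day year, totalled over one year.
DAYS_PER_YEAR = 365
HOURS_PER_YEAR = 24 * DAYS_PER_YEAR


def collective_years_online(users, hours_per_day, days=DAYS_PER_YEAR):
    """Total time spent online by all users over `days` days, expressed in years."""
    total_hours = users * hours_per_day * days
    return total_hours / HOURS_PER_YEAR


years = collective_years_online(INTERNET_USERS, HOURS_ONLINE_PER_DAY)
print(f"Collective time online in one year: {years / 1e9:.2f} billion years")
```

Under these assumptions the result lands a little above 1.2 billion years, which matches the quoted figure once it is rounded down to one decimal place.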

- Farmers can use data in yield predictions and for deciding what to plant and where to plant it.
- Because big data plays such a critical role in the modern business landscape, let's examine some of the most important big data statistics to establish its ever-increasing significance.
- For machine learning, projects like Apache SystemML, Apache Mahout, and Apache Spark's MLlib can be useful.

La-Z-Boy Converts Analytics Into Business Value

There were 79 zettabytes of data generated worldwide in 2021. For example, Facebook collects around 63 distinct pieces of data for API.

Big Data trends that are set to shape the future.

Posted: Sun, 23 Apr 2023 07:00:00 GMT [source]